The advent of new computational techniques based on so-called ‘artificial intelligence’ has led to the development of a new type of imaging system. Generative image systems can create photo-realistic images based on prompts from users such as ‘a grey squirrel on a sunflower eating seeds’. These systems will impact photography in a number of ways and pose huge challenges for commercial photographers as images used in illustration, such as furniture or fashion images, can be generated quickly and cheaply with minimal human intervention.

To illustrate what these systems can do, I decided to experiment with one of them (Adobe Firefly) and to see how well it would do in replicating photographs I had already taken. I chose images from three different genres - nature, landscape and street photography.

My first attempt was based on a photograph of a grey squirrel that climbed a sunflower in my garden to get at the seeds.

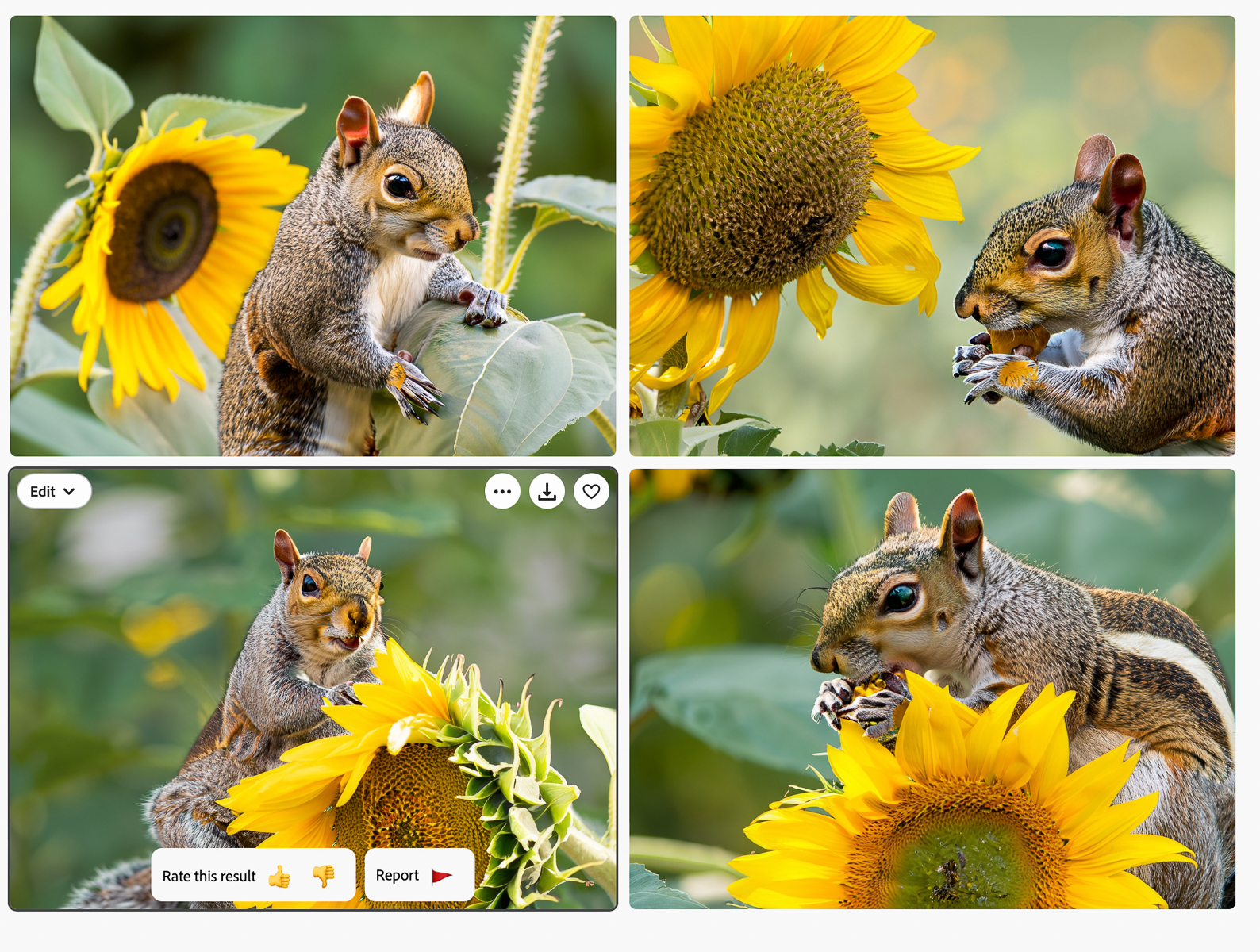

The prompt that I used to generate the images on Firefly was ‘Gray squirrel on sunflower eating seeds’ and this generated the four options shown in Image 2.

In this case, I think the image generator has done a credible job in creating squirrel images. They look a bit unnatural (image generators often have problems with hands) compared to the photographic image. It’s obvious that, with only a little improvement, they could substitute for a photographic image.

My second attempt was based on a ‘street’ photography image of two men talking that I took at a Holyrood garden party.

The prompt that I used to generate the images was ‘two older men, one in kilt and one in tail suit, talking to each other when attending garden party in Edinburgh with crowd and marquee in background’ (Image 4).

The results were really quite bizarre and bore no resemblance whatsoever to my original image. I suspect that the system didn’t understand ‘tail suit’ (perhaps it is called something else in American English) and, in one image, the men are not talking to each other. The mode of dress is quite odd (although, to be honest, this was sometimes true of the real people at the garden party!).

My final attempt was a landscape photograph taken from the summit of Arthur’s Seat (Image 5).

The Firefly prompt that I used was ‘Late autumn view of Edinburgh city and castle from the summit of Arthur’s Seat with Salisbury Crags in foreground’. Image 6 shows the results.

The results here are clearly a miserable failure bearing no resemblance whatsoever to the actual scene.

My conclusion from these experiments and others is that these systems are most effective in generating relatively simple images with clearly specified objects, such as squirrels and sunflowers. Their operation is based on statistical analysis so they don’t have a sense of place - they therefore only understand the generic notion of a castle and not the specific notion of ‘Edinburgh Castle’. However, we must remember that these systems are at an early stage of development and are improving rapidly. More contextual knowledge is an obvious improvement and I would expect to see this in the relatively near future.

Does all of this matter to amateur photographers? It does if you are interested in entering or organising photographic competitions or exhibitions. Clearly, we are interested in taking rather than generating images so, in general, we don’t have to worry about completely generated images being entered into photographic competitions.

However, the same technology is now being incorporated into Photoshop, Lightroom and other editing tools and this raises a quite fundamental question: ‘When does an image that has been edited and which includes generated elements stop being an original photograph?’ This can lead to a large number of subsidiary questions which I won’t go into here but, suffice to say, there are no easy answers to the ethical questions posed by generative AI technology.

I see generative AI systems as a new form of digital art. Just as photography replaced some types of drawing and painting, they will be used to replace some types of photographs. For photographers, they offer an opportunity for people with vision but with limited editing skills to alter images in ways that have, up till now, only been available to Photoshop experts and to improve images with technical flaws. To me, they are an opportunity rather than a threat but I recognise that they do pose very difficult questions for photographic competitions.

If you are interested in learning more about AI and photography, I have created a page for Edinburgh Photographic Society with links to various articles and opinions. Suggestions and contributions to this page are very welcome.